A review of the evidence base for Risk-Need-Responsivity principles was recently conducted by a team of researchers from the University of Oxford (UK), University of Konstanz (Germany), and Iowa State University (US). The article, “An updated evidence synthesis on the Risk-Need-Responsivity (RNR) model: Umbrella review and commentary,” was written by Seena Fazel, Connie Hurton, Matthias Burghart, Matt DeLisi, and Rongqin Yu, and published in the Journal of Criminal Justice (Vol. 92, May–June 2024). The authors indicate that RNR may lack adequate support for its viability, citing evidence of mixed quality that lacks transparency and shows authorship bias, and noting that prior reviews of the evidence were rated low quality.

Background and Research Purposes

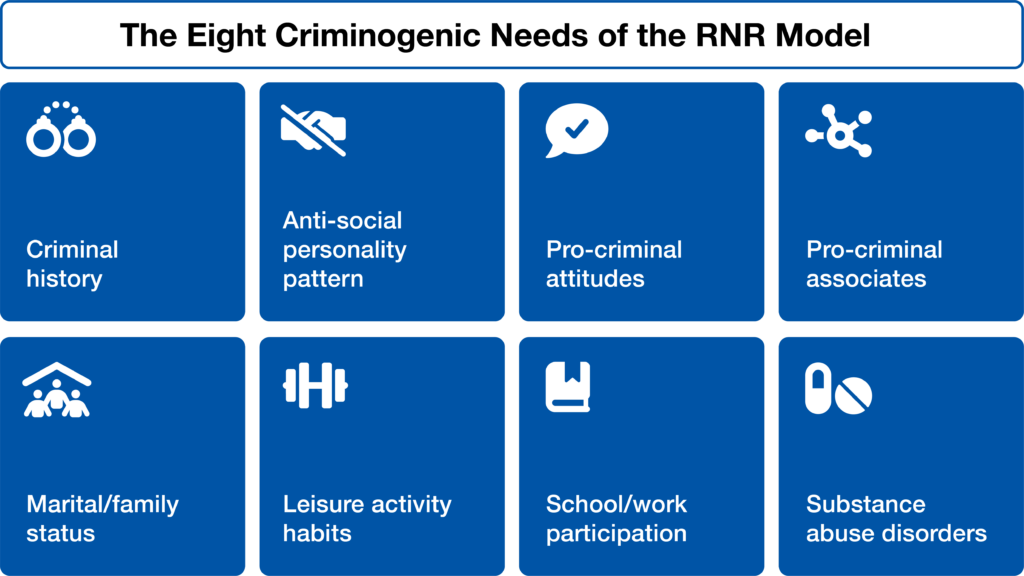

First articulated as a formal model for managing incarcerated individuals in 1990 – and having since become a widely accepted model in correctional settings throughout the world – RNR is built upon three key principles:

- Risk: This forms the basis of the “treatment matching” or “targeting” approach to intervention, stating that those at higher risk of re-offending should receive more intensive treatment.

- Need: This principle looks at criminogenic needs to determine an offender’s risk of recidivism.

- Responsivity: Having assessed an offender’s risk of recidivism based on their needs, the model articulates two components for reducing that risk: general responsivity (which relies on cognitive-social learning methods) and specific responsivity (which attempts to tailor treatment to an individual).

The RNR model is considered to have a solid evidence base built on primary studies, systematic reviews, and meta-analyses across its three principles, which has led to its popularity in comparison to other models. In this Journal of Criminal Justice article, however, the researchers observe that this reputation conflicts with certain characteristics of the evidence base: the evidence is dispersed in a way that makes proper assessment difficult, and many published syntheses focus on a single principle rather than the model as a whole and are now more than a decade old.

To answer these concerns, the researchers have conducted an “umbrella review” that collects the distinct segments of the evidence base and reviews their overall quality and consistency.

“Umbrella reviews are increasingly used as a validated, systematic and transparent approach to provide information to researchers and practitioners in areas where there is a large body of evidence of varying quality and displaying mixed results.”

Methodology

Data Search

The researchers employed a multifaceted approach to gathering the different studies and reviews that are part of the RNR evidence base. This included tailored keyword searches of electronic databases PubMed, PsycNet, and Scopus, as well as a search for literature produced outside of traditional publishing via Eldis, Google Scholar, and FindPolicy.

Eligibility Assessment

While no studies were excluded on the basis of language (i.e., English or non-English) or publication type, the researchers did adopt specific criteria for determining the eligibility of reviews for each principle, as follows:

- Studies of risk were deemed eligible if they “compared post-treatment recidivism outcomes for high and low-risk populations.”

- Studies of need were deemed eligible if they “assessed the predictive accuracy of one or more risk assessment tools for recidivism outcomes or…directly assessed a treatment program reflecting the need principle.”

- Studies of responsivity were deemed eligible within the distinct general and specific categories: General responsivity studies needed to “compare recidivism outcomes for treatment/intervention adhering to general responsivity with those not adhering to the principle,” while specific responsivity studies needed to review “the association between one of the model's eight specific responsivity factors and either treatment completion rates or recidivism outcomes.”

Study Selection

Studies accessible through database searches underwent a title check, abstract screening, and full-text review, while inaccessible studies were evaluated through direct contact with their author or institution. The researchers ultimately identified 26 separate meta-analyses published between 2002 and 2023 that encompassed over 450 different studies. These included 7 studies on risk, 6 on need, 15 on general responsivity, and 4 on specific responsivity.

Data Extraction

Relevant research data was gathered from the selected studies with a standardized form, and efforts were made to gather information on these particular variables within each study:

- Demographics

- Sample

- Methods

- Effect size and metric, and upper/lower confidence intervals

- Measures of heterogeneity and variation between different studies

Quality Assessment

The researchers adopted an existing approach to umbrella reviews, in which they scored the quality of each study on a seven-point scale, each point corresponding to an existing assessment tool or other validated measure:

- A score of at least 8/16 on the A MeaSurement Tool to Assess systematic Reviews 2 (AMSTAR-2) tool, referred to below as AMSTAR

- A lower-risk score of at least 2/4 on the Risk of Bias in Systematic Reviews (ROBIS) tool

- Excess significance bias ratio of <1 (comparing a meta-analysis’ pooled overall effect size and the effect size of its largest included study)

- Between-study heterogeneity of <50%

- Sample size of ≥1000

- A 95% prediction interval that does not include the null effect

- A score of no more than 5% on Egger’s regression asymmetry test, which looks at evidence for publication bias

Studies of the Need principle were exempt from assessment in the AMSTAR tool, and were therefore only scored to a maximum of six points. The researchers’ quality scale classified studies rated 0-2 as low, 3-4 as moderate, and 5-7 as high.
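
As an illustration only, the scoring scheme described above could be sketched in Python as follows. The `ReviewMetrics` class, its field names, and the rule that an unreported indicator earns no point are assumptions made for this sketch, not details taken from the article; the thresholds are copied from the list above as summarized here.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class ReviewMetrics:
    """Hypothetical record of one meta-analysis; None means not reported."""
    amstar: Optional[int] = None                  # AMSTAR score out of 16
    robis_low_risk: Optional[int] = None          # ROBIS lower-risk score out of 4
    excess_significance_ratio: Optional[float] = None
    heterogeneity_pct: Optional[float] = None     # between-study heterogeneity, %
    sample_size: Optional[int] = None
    pi_excludes_null: Optional[bool] = None       # 95% prediction interval excludes null
    egger_pct: Optional[float] = None             # Egger's test result, %
    amstar_exempt: bool = False                   # True for Need-principle studies


def quality_score(m: ReviewMetrics) -> Tuple[int, int]:
    """Return (points earned, maximum points available)."""
    # Assumption: an indicator that was not reported (None) earns no point.
    checks = [
        m.robis_low_risk is not None and m.robis_low_risk >= 2,
        m.excess_significance_ratio is not None and m.excess_significance_ratio < 1,
        m.heterogeneity_pct is not None and m.heterogeneity_pct < 50,
        m.sample_size is not None and m.sample_size >= 1000,
        m.pi_excludes_null is True,
        m.egger_pct is not None and m.egger_pct <= 5,
    ]
    # Need-principle studies skip the AMSTAR criterion, so their maximum is six.
    if not m.amstar_exempt:
        checks.append(m.amstar is not None and m.amstar >= 8)
    return sum(checks), len(checks)


def quality_band(score: int) -> str:
    """Map a score to the bands given above: 0-2 low, 3-4 moderate, 5-7 high."""
    if score <= 2:
        return "low"
    if score <= 4:
        return "moderate"
    return "high"
```

In this sketch, a Need-principle study would be passed with `amstar_exempt=True`, which caps its score at six while leaving the low, moderate, and high bands unchanged.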

Findings and Interpretations

Risk

Among the seven studies identified for the Risk principle, none achieved a quality score greater than 2/7, so all were rated low quality. These results stemmed from low methodological quality scores on AMSTAR and ROBIS, as well as missing information on factors such as publication bias. A majority of these studies showed lower confidence intervals, and most had overlapping samples; one of the studies whose sample did not overlap showed potential authorship bias.

Need

The six selected studies for the Need principle included those that evaluated treatment programs directly answering the identified need and those that evaluated risk assessment tools. Within the latter, some data had to be excluded for bias, as the tools had been developed by the study’s author(s). Overall, the quality of these studies was considered mixed; the studies that directly addressed need achieved scores of 0-2, while those studies that examined risk assessment tools held moderate-to-high (3-7) quality scores.

General Responsivity

The 15 studies examined by the researchers were determined to be mixed in quality – while only 4 studies out of 15 received AMSTAR scores lower than 8 or ROBIS scores lower than 3, other factors such as overlapping samples and potential authorship bias needed consideration. Overall, the assessment indicated eight studies of low quality, two of moderate quality, and five of high quality.

Specific Responsivity

Specific responsivity has been the subject of significantly fewer meta-analyses that the researchers deemed eligible for inclusion. The four included in their review reported on a range of outcomes: characteristics affecting attrition in treatment programs, whether such programs had shown an effect on recidivism rates, and whether treatments employing specific responsivity principles led to lower recidivism. The nature of this data meant that the researchers needed to rely on AMSTAR and ROBIS scoring to assess quality, and they found that these four meta-analyses were of low-to-moderate quality.

Conclusions

The final assessment of the researchers was that there was inconsistency within the evidence base to support the validity of RNR principles, and that the underlying systematic reviews showed poor quality and significant gaps in data. They further outlined five crucial challenges identified within the reviewed studies:

- Authorship bias

- A lack of transparency and accessibility

- Primary studies of poor quality

- Flaws in subgroup analysis methodology

- The conflation of prediction with causality

“In light of the documented allegiance effects in intervention and prediction research, it is notable that many of the included reviews did not address or disclose potential conflicts of interest. This is particularly important when there are potential financial conflicts of interest.”

The concerns raised by this umbrella review call into question the reliability of the studies that were testing the RNR model, and therefore the conclusions reached about the model’s effectiveness in correctional settings. Until higher quality research is presented to confirm the impact of RNR and dispel doubts about its theoretical validity, the researchers feel that the model should no longer be introduced to additional jurisdictions.

Source: An updated evidence synthesis on the Risk-Need-Responsivity (RNR) model: Umbrella review and commentary (Seena Fazel, Connie Hurton, Matthias Burghart, Matt DeLisi & Rongqin Yu) https://www.sciencedirect.com/science/article/pii/S0047235224000461?via%3Dihub